AWS Self-Hosted Deployment Requirements

Please contact support@runwhen.com for production deployment sizing and recommendations.

Overview

This document outlines the requirements and infrastructure specifications for the Proof of Concept (POC) self-hosted deployment in an AWS environment.

During this process:

A dedicated EKS cluster to operate the RunWhen Platform will be deployed

One (or more) Private Runners will be deployed (via Helm) into an existing EKS cluster

Container images will be synchronized to a private container registry (this step requires some initial customization)

This process will require (see details below):

A user workstation or bastion host for installation

AWS Account credentials

Public Internet Access

A Private container registry

A Route53 DNS subdomain

A supported LLM Endpoint & Model

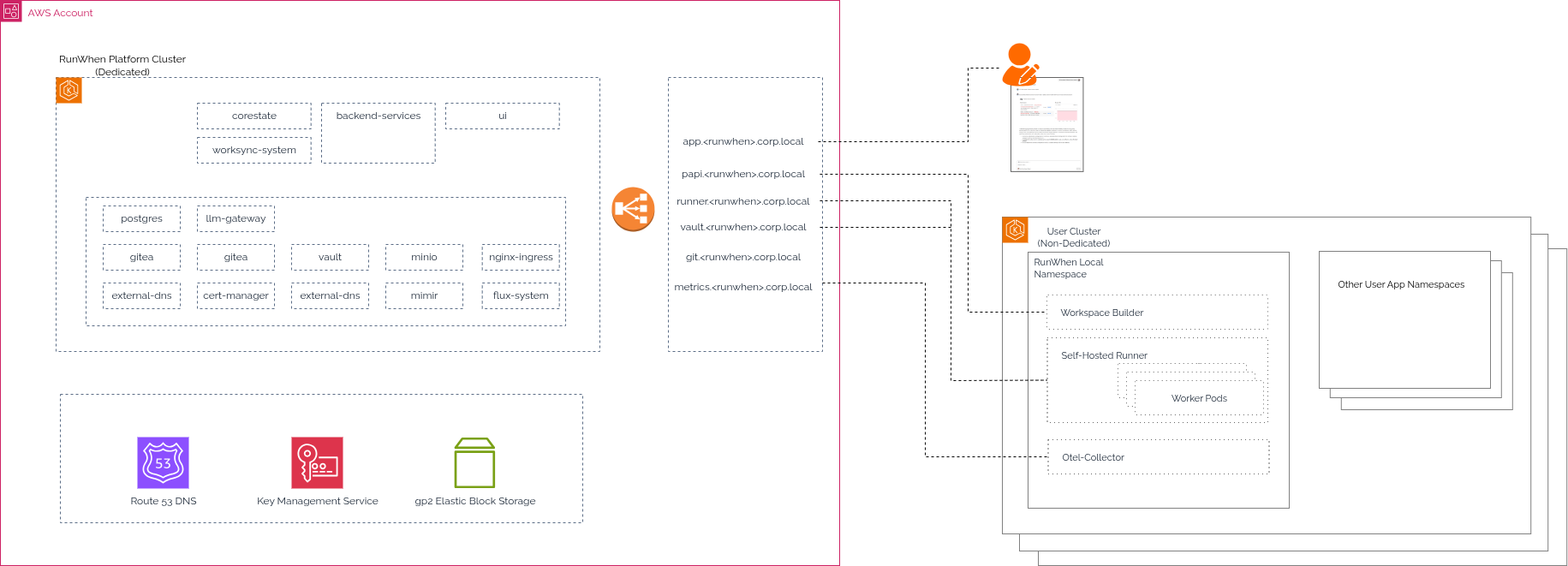

High-Level Architecture

A dedicated Amazon EKS cluster is deployed to operate the self-hosted RunWhen Platform components. During a Proof of Concept installation, application traffic is handled by a Classic Load Balancer (CLB) provisioned by the ingress-nginx controller. Public DNS records are created automatically in your Route 53 subdomain by external-dns, and TLS certificates are managed by cert-manager. Secret management is provided by HashiCorp Vault, which uses AWS KMS for automatic unsealing.

This deployment consists of two components: the RunWhen Platform (described in this document) and RunWhen Local (the private runner, installed separately). The RunWhen Local private runner is registered to the RunWhen Platform and is responsible for secure task execution within its secure zone. Each RunWhen Local private runner deployment must be able to communicate back to the RunWhen Platform.

Requirements

Workstation / Bastion Host

The workstation or bastion host is required to run the installation container. This container provides the necessary CLI tools and scripts to complete the EKS deployment process.

A desktop with Docker installed

Stable internet access to reach AWS API endpoints

Authenticated access to pull/push images into a private container registry

Network Requirements

Access to the following public URLs is required for the initial deployment:

RunWhen Platform

Container Registries

| Name | URL |
|---|---|
| Google Artifact Registry | us-docker.pkg.dev & us-west1-docker.pkg.dev |
| GitHub Container Registry | |
| Kubernetes Container Registry | |
| Crunchy Container Registry | |
| Quay Registry | |
| DockerHub | |

Helm Repositories

| Name | URL |
|---|---|
| bitnami | oci://registry-1.docker.io/bitnamicharts |
| grafana | |
| hashicorp | |
| ingress-nginx | |
| jetstack | |
| prometheus-community | |
| qdrant | |
| runwhen-contrib | |
| secrets-store-csi-driver | https://kubernetes-sigs.github.io/secrets-store-csi-driver/charts |
| vault | |
| gitea | |

RunWhen Local Private Runner

CodeCollection Discovery URLs

api.cloudquery.io

github.com/runwhen-contrib
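Before starting, it can help to confirm that the workstation or bastion host can actually reach the hosts listed above. A minimal sketch, assuming a TCP connection on port 443 is a good-enough first signal; it only includes hostnames that appear in this document (registries and Helm repositories listed without a URL are left out), and the helper name is illustrative:

```python
import socket
from typing import Callable, Dict, Iterable

# Hostnames that appear in the network requirements above. Entries listed
# without a URL are not included; add their hosts for your environment.
REQUIRED_HOSTS = [
    "us-docker.pkg.dev",
    "us-west1-docker.pkg.dev",
    "registry-1.docker.io",
    "kubernetes-sigs.github.io",
    "api.cloudquery.io",
    "github.com",
]


def check_https_reachability(
    hosts: Iterable[str],
    port: int = 443,
    timeout: float = 5.0,
    connect: Callable = socket.create_connection,
) -> Dict[str, bool]:
    """Try a TCP connection to each host and report which ones succeed.

    This only shows that DNS resolution and a TCP handshake on `port` work
    from this machine; it does not validate TLS, proxies, or URL paths.
    """
    results: Dict[str, bool] = {}
    for host in hosts:
        try:
            # create_connection raises OSError (including socket.gaierror
            # and timeouts) when the host cannot be reached.
            conn = connect((host, port), timeout=timeout)
            conn.close()
            results[host] = True
        except OSError:
            results[host] = False
    return results
```

Calling `check_https_reachability(REQUIRED_HOSTS)` returns a host-to-boolean map; any `False` entry points to a blocked egress path or a DNS problem worth resolving before the installation starts.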

AWS Account Access

For the initial POC deployment, temporary AWS Account credentials will be used to run the automated setup:

AWS Access Key ID

AWS Secret Access Key

AWS Session Token (required for authentication; IAM Role / SSO-based deployments are not validated yet)

The Account credentials require the following permissions for the duration of the automated deployment. These permissions are necessary for eksctl and AWS CloudFormation to provision the EKS cluster and its dependencies. These credentials are not stored or used for ongoing operations after the installation is complete.

| AWS Service | Access Level | Purpose |
|---|---|---|
| CloudFormation | Full Access | To create, update, and delete stacks managed by eksctl |
| EC2 | Full: Tagging; Limited: List, Read, Write | To provision and manage EKS worker nodes, security groups, and launch templates |
| EC2 Auto Scaling | Limited: List, Write | To create and manage Auto Scaling Groups for EKS nodes |
| EKS | Full Access | To create and configure the EKS cluster control plane |
| IAM | Limited: List, Read, Write, Permissions Management | To create the EKS cluster role, node instance role, and OIDC provider for IRSA |
| Systems Manager | Limited: List, Read | Used by |

Using short-lived IAM user credentials is an interim measure for a proof of concept deployment.
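The installer expects a complete temporary credential set: access key ID, secret access key, and session token. A minimal pre-flight sketch, assuming the values are exported as the standard AWS SDK environment variables (how the provided installer container actually reads them may differ), that flags any that are missing:

```python
import os
from typing import List, Mapping, Optional

# Standard AWS SDK/CLI environment variable names for temporary credentials.
REQUIRED_VARS = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY", "AWS_SESSION_TOKEN")


def missing_credentials(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return the required credential variables that are unset or empty.

    Presence is all this checks; it says nothing about the permissions
    attached to the credentials or whether the session has expired.
    """
    source = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not source.get(name)]


if __name__ == "__main__":
    missing = missing_credentials()
    if missing:
        print("Missing temporary AWS credentials:", ", ".join(missing))
    else:
        print("Temporary AWS credentials are set.")
```

Running `aws sts get-caller-identity` afterwards confirms that the credentials are valid and shows which identity they belong to.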

AWS Route53 Hosted Zone

A pre-existing Route 53 Hosted Zone (e.g., runwhen.corp.local) will be requested during the installation process. Ideally, this subdomain is fully resolvable before installation begins, because cert-manager needs it to issue and verify TLS certificates.

During installation, the external-dns component creates the following DNS records in Route 53:

app.{SUBDOMAIN}.{DOMAIN} A

extdnsapp.{SUBDOMAIN}.{DOMAIN} TXT

extdnscname-app.{SUBDOMAIN}.{DOMAIN} TXT

extdnscname-gitea.{SUBDOMAIN}.{DOMAIN} TXT

extdnscname-minio-console.{SUBDOMAIN}.{DOMAIN} TXT

extdnscname-minio.{SUBDOMAIN}.{DOMAIN} TXT

extdnscname-papi.{SUBDOMAIN}.{DOMAIN} TXT

extdnscname-runner-mimir-tenant.{SUBDOMAIN}.{DOMAIN} TXT

extdnscname-runner.{SUBDOMAIN}.{DOMAIN} TXT

extdnscname-slack.{SUBDOMAIN}.{DOMAIN} TXT

extdnscname-vault.{SUBDOMAIN}.{DOMAIN} TXT

extdnsgitea.{SUBDOMAIN}.{DOMAIN} TXT

extdnsminio-console.{SUBDOMAIN}.{DOMAIN} TXT

extdnsminio.{SUBDOMAIN}.{DOMAIN} TXT

extdnspapi.{SUBDOMAIN}.{DOMAIN} TXT

extdnsrunner-mimir-tenant.{SUBDOMAIN}.{DOMAIN} TXT

extdnsrunner.{SUBDOMAIN}.{DOMAIN} TXT

extdnsslack.{SUBDOMAIN}.{DOMAIN} TXT

extdnsvault.{SUBDOMAIN}.{DOMAIN} TXT

gitea.{SUBDOMAIN}.{DOMAIN} A

minio-console.{SUBDOMAIN}.{DOMAIN} A

minio.{SUBDOMAIN}.{DOMAIN} A

papi.{SUBDOMAIN}.{DOMAIN} A

runner-mimir-tenant.{SUBDOMAIN}.{DOMAIN} A

runner.{SUBDOMAIN}.{DOMAIN} A

slack.{SUBDOMAIN}.{DOMAIN} A

vault.{SUBDOMAIN}.{DOMAIN} A

TXT records prefixed with extdns are ownership records: external-dns uses them to track which DNS records it created and manages.
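The record list follows a regular pattern: one A record per exposed hostname, plus two TXT ownership records per hostname (`extdns<host>` and `extdnscname-<host>`). The sketch below regenerates the expected names so they can be compared against the zone after installation (for example, with `aws route53 list-resource-record-sets`). The function name and the way the example splits runwhen.corp.local into subdomain and domain are illustrative assumptions:

```python
from typing import List, Tuple

# Hostnames taken from the record list above.
HOSTS = ["app", "gitea", "minio", "minio-console", "papi",
         "runner", "runner-mimir-tenant", "slack", "vault"]


def expected_records(subdomain: str, domain: str) -> List[Tuple[str, str]]:
    """Return sorted (name, type) pairs that external-dns should create."""
    zone = f"{subdomain}.{domain}"
    records = []
    for host in HOSTS:
        # The A record pointing the hostname at the load balancer.
        records.append((f"{host}.{zone}", "A"))
        # The two ownership TXT records listed for every hostname.
        records.append((f"extdns{host}.{zone}", "TXT"))
        records.append((f"extdnscname-{host}.{zone}", "TXT"))
    return sorted(records)


if __name__ == "__main__":
    for name, rtype in expected_records("runwhen", "corp.local"):
        print(f"{rtype:4} {name}")
```

For the nine hostnames above this yields 27 records: 9 A records and 18 TXT records.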

Container Image Registry

A private container registry (e.g., Amazon ECR, Artifactory, Harbor) is required for the deployment. This registry must be accessible from the EKS cluster's VPC. During installation:

write credentials must be available on the workstation/bastion host to push images into the container registry

network access must exist to support pulling images from EKS nodes

Private Images

RunWhen Platform images will need to be pushed to a private registry/repository. The full repository path will be requested as a configuration variable during the installation process.
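The exact mapping between the RunWhen source images and your private repository is defined by the installer. As a hypothetical illustration only, assuming each image keeps its name and tag and is placed directly under the configured repository path, the target reference could be derived like this (all paths below are placeholders):

```python
def retarget_image(source: str, private_repo: str) -> str:
    """Hypothetical mapping: keep the final path component (name:tag or
    name@digest) and prefix it with the private repository path."""
    name_and_tag = source.rsplit("/", 1)[-1]
    return f"{private_repo.rstrip('/')}/{name_and_tag}"


if __name__ == "__main__":
    # Placeholder source and destination, not real RunWhen image paths.
    src = "us-docker.pkg.dev/example-project/example-repo/example-image:1.0.0"
    dst = retarget_image(src, "123456789012.dkr.ecr.us-east-1.amazonaws.com/runwhen")
    # skopeo copies an image between registries without a local Docker daemon.
    print(f"skopeo copy docker://{src} docker://{dst}")
```

Printing `skopeo copy` commands is one option for the synchronization step; a `docker pull`, `docker tag`, `docker push` sequence from the workstation achieves the same result.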

Public Images

Several components (such as ingress-nginx and cert-manager) use public container images and public Helm charts. By default, the cluster is configured to pull these images directly from public registries on the internet.

Registry Authentication

Configuring authentication between the EKS cluster and your container registry is a key setup step. It is handled by the customer, because the deployment automation does not manage registry credentials.
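How nodes authenticate depends on the registry. With Amazon ECR in the same account, the node instance role typically needs read access (for example, the AWS managed policy `AmazonEC2ContainerRegistryReadOnly`). For other registries, a common approach is a `kubernetes.io/dockerconfigjson` Secret referenced through `imagePullSecrets`. The sketch below builds such a Secret manifest, matching the shape that `kubectl create secret docker-registry` produces; the secret name, namespace, registry, and credentials are placeholders:

```python
import base64
import json


def docker_config_secret(name: str, namespace: str, registry: str,
                         username: str, password: str) -> dict:
    """Build a kubernetes.io/dockerconfigjson Secret manifest as a dict."""
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    docker_config = {
        "auths": {registry: {"username": username, "password": password, "auth": auth}}
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "kubernetes.io/dockerconfigjson",
        # Values under "data" must be base64-encoded.
        "data": {
            ".dockerconfigjson": base64.b64encode(json.dumps(docker_config).encode()).decode()
        },
    }


if __name__ == "__main__":
    # Placeholder values; never commit real registry credentials.
    manifest = docker_config_secret("private-registry", "default",
                                    "registry.example.com", "robot-user", "example-password")
    print(json.dumps(manifest, indent=2))
```

The printed manifest can be piped to `kubectl apply -f -`; pods then reference it with `imagePullSecrets: [{name: private-registry}]`, or it can be attached to a namespace's default service account.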

LLM Integration

For core functionalities like generating summaries and next steps, the platform requires a connection to a Large Language Model (LLM). You will need to provide your own LLM endpoint and credentials from a provider of your choice (e.g., OpenAI, Azure OpenAI, Anthropic).

The platform automatically scrubs all data to remove sensitive information before it is sent to the LLM. Communication is handled securely by the platform.

The connection is managed by a built-in LiteLLM proxy, which provides a flexible and standard interface to connect with most major LLM providers.

Detailed instructions for configuring your endpoint are available in our guide: Bring Your Own LLM

Infrastructure and Component Specifications

Additional technical details and notes related to a PoC installation are outlined below.

EKS Cluster & NodeGroup

| Item | Default | Notes |
|---|---|---|
| Region | us-east-1 | Configurable |
| Instance Type | t3a.xlarge (4 vCPU, 16 GB RAM) | Configurable |
| Scaling | 3-4 nodes | Configurable |
| IP Address Requirements | Defaults to Amazon VPC CNI plugin | Requires a minimum of 110 IP addresses |
| OIDC Enabled | True | Required for IAM Roles for Service Accounts (IRSA) |
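With the Amazon VPC CNI plugin, pods receive addresses from the VPC subnets, so the 110-address minimum is a subnet sizing question. A quick capacity sketch, assuming hypothetical node subnet CIDRs and accounting for the five addresses AWS reserves in every subnet. It measures total CIDR capacity, not addresses still free; for live numbers, read the `AvailableIpAddressCount` field from `aws ec2 describe-subnets`:

```python
import ipaddress
from typing import Iterable

AWS_RESERVED_PER_SUBNET = 5  # AWS reserves the first four and the last IP of every subnet
MIN_REQUIRED_IPS = 110       # PoC minimum from the table above


def usable_ips(cidrs: Iterable[str]) -> int:
    """Total assignable addresses across the given subnet CIDR blocks."""
    return sum(
        ipaddress.ip_network(cidr).num_addresses - AWS_RESERVED_PER_SUBNET
        for cidr in cidrs
    )


if __name__ == "__main__":
    node_subnets = ["10.0.1.0/26", "10.0.2.0/26"]  # hypothetical subnets
    total = usable_ips(node_subnets)
    print(f"{total} usable IPs, minimum met: {total >= MIN_REQUIRED_IPS}")
```

Two /26 subnets give 2 × (64 − 5) = 118 usable addresses, just above the minimum; a single /24 (251 usable) leaves comfortable headroom.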

Networking

| Item | Default | Notes |
|---|---|---|
| Load Balancer | 1 Classic Load Balancer | Provisioned by the ingress-nginx controller |
| DNS Integration | A and TXT records integrated into Route 53 | Managed by external-dns |
| TLS Certificates | Managed in-cluster with cert-manager | |

Storage

| Item | Default | Notes |
|---|---|---|
| Persistent Volumes | Defaults to gp2 | The default, or additional storage classes, can be configured post-installation |
| Quota | Minimum 410 GB | This minimum applies to Proof of Concept installations only |

Secrets Management

| Item | Default | Notes |
|---|---|---|
| Secrets Management | HashiCorp Vault | Not configurable |
| Seal Key Management | Auto unseal with AWS KMS | Not configurable |
| Root Token | Auto-generated and shared with installation user | Not configurable |

IAM Roles for Service Accounts (IRSA)

The automated installer creates the following IAM roles for secure, passwordless access to AWS services from within the cluster.

| Role | Purpose | Notes |
|---|---|---|
| Vault-KMS Integration Role | Allows Vault to communicate with KMS for automatically unsealing the instance | |
| | Allows ExternalDNS to manage Route 53 records for the designated subdomain | |

HashiCorp Vault and KMS Integration

Vault uses AWS KMS to encrypt and decrypt its root key, which lets it unseal automatically without manually entered unseal keys.

To grant this access through IRSA, the installer creates a new KMS key, IAM policy, and IAM role:

KMS key alias: runwhenvault-${CLUSTER}

Role name: vault-kms-role-${CLUSTER}

Policy name: RunWhenVaultKMSUnsealPolicy-${CLUSTER}

Policy:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "kms:Encrypt",
        "kms:Decrypt",
        "kms:DescribeKey"
      ],
      "Resource": "${KMS_KEY_ARN}"
    }
  ]
}
```

The role trusts the cluster's federated OIDC provider, so that the `vault-sa` Kubernetes service account (in the `vault` namespace) can assume it:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Federated": "arn:aws:iam::${AWS_ACCOUNT_ID}:oidc-provider/${OIDC_PROVIDER}"
      },
      "Action": "sts:AssumeRoleWithWebIdentity",
      "Condition": {
        "StringEquals": {
          "${OIDC_CONDITION_KEY}": "system:serviceaccount:vault:vault-sa"
        }
      }
    }
  ]
}
```
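Both documents above are templates. The sketch below renders them for concrete values, assuming `${OIDC_CONDITION_KEY}` is the provider's `:sub` claim key (the usual form in IRSA trust policies, e.g. `oidc.eks.us-east-1.amazonaws.com/id/<ID>:sub`). The function names and example values are illustrative, not part of the installer:

```python
import json


def irsa_trust_policy(account_id: str, oidc_provider: str,
                      namespace: str, service_account: str) -> dict:
    """Trust policy letting one Kubernetes service account assume a role via IRSA.

    oidc_provider is the issuer host and path without "https://",
    e.g. "oidc.eks.us-east-1.amazonaws.com/id/EXAMPLE".
    """
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {
                "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{oidc_provider}"
            },
            "Action": "sts:AssumeRoleWithWebIdentity",
            "Condition": {"StringEquals": {
                # Assumed value of ${OIDC_CONDITION_KEY}: the ":sub" claim key.
                f"{oidc_provider}:sub": f"system:serviceaccount:{namespace}:{service_account}"
            }},
        }],
    }


def vault_kms_policy(kms_key_arn: str) -> dict:
    """Permission policy for Vault auto-unseal against a single KMS key."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": ["kms:Encrypt", "kms:Decrypt", "kms:DescribeKey"],
            "Resource": kms_key_arn,
        }],
    }


if __name__ == "__main__":
    provider = "oidc.eks.us-east-1.amazonaws.com/id/EXAMPLE"  # hypothetical
    print(json.dumps(irsa_trust_policy("123456789012", provider, "vault", "vault-sa"), indent=2))
```

A cluster's issuer URL is available from `aws eks describe-cluster --name <cluster> --query cluster.identity.oidc.issuer`; drop the `https://` prefix to get the provider value.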

external-dns and Route53 Integration

external-dns communicates with the AWS Route 53 service to create, update, and delete DNS records. Using IRSA, the installer creates a new IAM policy and role:

Role name: external-dns-role-${CLUSTER}

Policy name: RunWhenExternalDNSRoute53Policy-${CLUSTER}

Policy:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "route53:ChangeResourceRecordSets"
      ],
      "Resource": [
        "arn:aws:route53:::hostedzone/${ZONE_ID}"
      ]
    },
    {
      "Effect": "Allow",
      "Action": [
        "route53:ListHostedZones",
        "route53:ListResourceRecordSets"
      ],
      "Resource": "*"
    }
  ]
}
```

The role trusts the cluster's federated OIDC provider, so that the `external-dns` Kubernetes service account (in the `external-dns` namespace) can assume it:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Federated": "arn:aws:iam::${AWS_ACCOUNT_ID}:oidc-provider/${OIDC_PROVIDER}"
      },
      "Action": "sts:AssumeRoleWithWebIdentity",
      "Condition": {
        "StringEquals": {
          "${OIDC_CONDITION_KEY}": "system:serviceaccount:external-dns:external-dns"
        }
      }
    }
  ]
}
```
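The Route 53 API reports hosted zone IDs in the form `/hostedzone/Z...` (for example, in `aws route53 list-hosted-zones-by-name` output), while the permission policy expects the bare ID in `${ZONE_ID}`. A small sketch, with illustrative names, that accepts either form and renders that policy:

```python
import json


def normalize_zone_id(zone_id: str) -> str:
    """Accept either "Z123..." or the "/hostedzone/Z123..." form the API returns."""
    return zone_id.rsplit("/", 1)[-1]


def external_dns_policy(zone_id: str) -> dict:
    """Render the external-dns Route 53 permission policy for one hosted zone."""
    zone = normalize_zone_id(zone_id)
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Record changes are limited to the designated hosted zone.
                "Effect": "Allow",
                "Action": ["route53:ChangeResourceRecordSets"],
                "Resource": [f"arn:aws:route53:::hostedzone/{zone}"],
            },
            {
                # Listing calls are not scoped to a single zone.
                "Effect": "Allow",
                "Action": ["route53:ListHostedZones", "route53:ListResourceRecordSets"],
                "Resource": "*",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(external_dns_policy("/hostedzone/Z0123456789EXAMPLE"), indent=2))
```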

Responsibilities Matrix

| Area | Our Responsibility (Automated Deployment) | Customer Responsibility (Prerequisites & Management) |
|---|---|---|
| AWS Access | Use the provided credentials for one-time setup. | Provide temporary IAM user credentials with the required permissions. |
| EKS Cluster & Nodes | Provision and configure the EKS cluster, control plane, and node groups. | Ensure sufficient service quotas (EC2, etc.) are available. |
| Container Images & Registry | Use the customer-provided registry path in Kubernetes manifests to reference images. | |
| Networking & Ingress | Deploy ingress-nginx, which provisions a Classic Load Balancer. | N/A |
| Storage | Install the EBS CSI driver and configure the gp2 storage class for PVCs. | Verify EBS volume quotas and limits. |
| Domain & DNS | Create the necessary DNS records in the provided zone. | Provide a Route 53 Hosted Zone and delegate the domain. |
| TLS Certificates | Set up `cert-manager` with a default `letsencrypt-staging` issuer. | Configure a production certificate issuer post-deployment. |
| Secrets (Vault) | Install and configure Vault with KMS auto-unseal. | Securely capture and store the one-time Initial Root Token. |
| LLM Integration | Provide a pre-configured LiteLLM proxy and built-in data scrubbing modules. | Provide a functioning LLM endpoint and API key, and configure these credentials as specified. |
| Observability | N/A | Deploy and manage any desired monitoring or logging stacks. |
| Local Runner Connectivity | Provide a stable, addressable endpoint URL for the platform. | Ensure the network path and firewall rules allow the runner to reach the platform's endpoint URL (outbound HTTPS/443). |

Cost Considerations

A minimal high-availability deployment is estimated as follows:

| Item | Estimated Cost (USD) | Notes |
|---|---|---|
| EKS Control Plane | $73/month | |
| EC2 Nodes | $360/month | 3 × t3a.xlarge, on-demand |
| Classic Load Balancer | $18/month | |
| EBS Storage | ~$41/month | 410 GB at ~$0.10/GB-month (price varies) |
| Total | ~$500/month | Estimated minimum PoC cost |
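The total is the sum of the line items, with EBS storage derived from the 410 GB PoC minimum at about $0.10 per GB-month. A quick check of the arithmetic, using only the figures in the table:

```python
from typing import Dict

# Line items from the table above, in USD per month.
POC_ESTIMATE_USD: Dict[str, float] = {
    "EKS control plane": 73.0,
    "EC2 nodes (3 x t3a.xlarge, on-demand)": 360.0,
    "Classic Load Balancer": 18.0,
    "EBS storage (410 GB x $0.10/GB-month)": 410 * 0.10,
}


def monthly_total(items: Dict[str, float]) -> float:
    """Sum the monthly line items."""
    return sum(items.values())


if __name__ == "__main__":
    for item, cost in POC_ESTIMATE_USD.items():
        print(f"{item:40} ${cost:8.2f}")
    print(f"{'Total':40} ${monthly_total(POC_ESTIMATE_USD):8.2f}")
```

The items sum to $492 per month, which the table rounds to ~$500.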

Deployment Instructions

RunWhen Platform Installation

Please contact support@runwhen.com for all self-hosted installation steps and access to private images.

Ensure requirements are met

Run the provided Docker image with your AWS credentials mounted or injected through a configuration file

The deployment process will:

Provision required AWS resources via AWS CloudFormation

Create an EKS cluster with configured node groups

Deploy supporting services (Ingress, Vault, storage, etc.)

Once complete, verify that:

The ELB (Classic Load Balancer) is provisioned and reachable

The domain (e.g., app.runwhen.corp.local) resolves correctly to the ELB

All pods are running and healthy (`kubectl get pods -A`)
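For a check that goes beyond reading `kubectl get pods -A` by eye, its JSON output can be filtered for pods that are neither `Running` nor `Succeeded`, or that are `Running` with containers not yet ready. A minimal sketch; the helper names are illustrative, and it assumes `kubectl` is already configured for the new cluster:

```python
import json
import subprocess
from typing import List, Tuple

# "Succeeded" covers pods from completed Jobs, which are not failures.
HEALTHY_PHASES = {"Running", "Succeeded"}


def unhealthy_pods(pod_list: dict) -> List[Tuple[str, str, str]]:
    """Return (namespace, name, reason) for each pod that does not look healthy."""
    problems: List[Tuple[str, str, str]] = []
    for pod in pod_list.get("items", []):
        meta = pod["metadata"]
        status = pod.get("status", {})
        phase = status.get("phase", "Unknown")
        if phase not in HEALTHY_PHASES:
            problems.append((meta["namespace"], meta["name"], phase))
        elif phase == "Running":
            # A Running pod can still have containers failing readiness probes.
            not_ready = [c["name"] for c in status.get("containerStatuses", [])
                         if not c.get("ready")]
            if not_ready:
                problems.append((meta["namespace"], meta["name"],
                                 "not ready: " + ", ".join(not_ready)))
    return problems


def fetch_all_pods() -> dict:
    """Run `kubectl get pods -A -o json` and parse the result."""
    result = subprocess.run(["kubectl", "get", "pods", "-A", "-o", "json"],
                            check=True, capture_output=True, text=True)
    return json.loads(result.stdout)
```

`unhealthy_pods(fetch_all_pods())` returns an empty list when every pod is running and ready. Pods may take a few minutes to settle after installation, so repeat the check before investigating individual entries.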

Next Steps

With the platform installation complete:

Create a user account and log in

Proceed to Installing RunWhen Local (Private Runner)

Known Limitations

IAM Role / SSO-based deployments are not validated yet

Installations have been tested in us-east-1

Monitoring / alerting integrations require post-deployment configuration

Internet access is required for pulling public container images by default